diff --git a/TrainGLaDOS_NeMo.ipynb b/TrainGLaDOS_NeMo.ipynb

new file mode 100644

index 0000000..fc23ff2

--- /dev/null

+++ b/TrainGLaDOS_NeMo.ipynb

@@ -0,0 +1,401 @@

+{

+ "cells": [

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "b276bbe0",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "#!sudo apt install sox libsndfile1 ffmpeg\n",

+ "#!pip3 install wget unidecode pynini==2.1.4\n",

+ "#!pip3 install git+https://github.com/NVIDIA/NeMo.git@v1.12.0#egg=nemo_toolkit[all]\n",

+ "#!wget https://raw.githubusercontent.com/NVIDIA/NeMo/main/nemo_text_processing/install_pynini.sh\n",

+ "#!bash install_pynini.sh"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "c3b7b7e3",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import requests\n",

+ "from multiprocessing import cpu_count\n",

+ "from multiprocessing.pool import ThreadPool\n",

+ "import shutil\n",

+ "import os\n",

+ "from bs4 import BeautifulSoup\n",

+ "import soundfile as sf\n",

+ "import string\n",

+ "import json\n",

+ "import re\n",

+ "import num2words\n",

+ "\n",

+ "class bcolors:\n",

+ " HEADER = '\\033[95m'\n",

+ " OKBLUE = '\\033[94m'\n",

+ " OKCYAN = '\\033[96m'\n",

+ " OKGREEN = '\\033[92m'\n",

+ " WARNING = '\\033[93m'\n",

+ " FAIL = '\\033[91m'\n",

+ " ENDC = '\\033[0m'\n",

+ " BOLD = '\\033[1m'\n",

+ " UNDERLINE = '\\033[4m'\n",

+ "\n",

+ "blocklist = [\"potato\", \"_ding_\", \"00_part1_entry-6\"]\n",

+ "audio_dir = 'audio'\n",

+ "download_threads = 64\n",

+ "\n",

+ "def prep(args, overwrite=False):\n",

+ "    already_exists = os.path.exists(audio_dir)\n",

+ "\n",

+ "    if already_exists and not overwrite:\n",

+ "        print(\"Data already downloaded\")\n",

+ "        return\n",

+ "\n",

+ "    if already_exists:\n",

+ "        print(\"Deleting previously downloaded audio\")\n",

+ "        shutil.rmtree(audio_dir)\n",

+ "\n",

+ "    os.mkdir(audio_dir)\n",

+ "    download_parallel(args)\n",

+ "\n",

+ "def remove_punctuation(text):\n",

+ "    return text.translate(str.maketrans('', '', string.punctuation))\n",

+ "\n",

+ "def audio_duration(fn):\n",

+ "    info = sf.info(fn)\n",

+ "    return info.frames / info.samplerate\n",

+ "\n",

+ "def download_file(args):\n",

+ "    url, filename = args[0], args[1]\n",

+ "    try:\n",

+ "        response = requests.get(url)\n",

+ "        response.raise_for_status()  # treat HTTP errors as failed downloads\n",

+ "        open(os.path.join(audio_dir, filename), \"wb\").write(response.content)\n",

+ "        return filename, True\n",

+ "    except Exception:\n",

+ "        return filename, False\n",

+ "\n",

+ "def download_parallel(args):\n",

+ "    results = ThreadPool(download_threads).imap_unordered(download_file, args)\n",

+ "    for result in results:\n",

+ "        if result[1]:\n",

+ "            print(bcolors.OKGREEN + \"[\" + u'\\u2713' + \"] \" + bcolors.ENDC + result[0])\n",

+ "        else:\n",

+ "            print(bcolors.FAIL + \"[\" + u'\\u2715' + \"] \" + bcolors.ENDC + result[0])\n",

+ "\n",

+ "def main():\n",

+ "    r = requests.get(\"https://theportalwiki.com/wiki/GLaDOS_voice_lines\")\n",

+ "\n",

+ "    urls = []\n",

+ "    filenames = []\n",

+ "    texts = []\n",

+ "\n",

+ "    soup = BeautifulSoup(r.text.encode('utf-8').decode('ascii', 'ignore'), 'html.parser')\n",

+ "    for link_item in soup.find_all('a'):\n",

+ "        url = link_item.get(\"href\", None)\n",

+ "        if url:\n",

+ "            if \"https:\" in url and \".wav\" in url:\n",

+ "                list_item = link_item.find_parent(\"li\")\n",

+ "                ital_item = list_item.find_all('i')\n",

+ "                if ital_item:\n",

+ "                    text = ital_item[0].text\n",

+ "                    text = text.replace('\"', '')\n",

+ "                    filename = url[url.rindex(\"/\")+1:]\n",

+ "\n",

+ "                    if \"[\" not in text and \"]\" not in text and \"$\" not in text:\n",

+ "                        if url not in urls:\n",

+ "                            for s in blocklist:\n",

+ "                                if s in url:\n",

+ "                                    break\n",

+ "                            else:\n",

+ "                                urls.append(url)\n",

+ "                                filenames.append(filename)\n",

+ "                                text = text.replace('*', '')\n",

+ "                                text = re.sub(r\"(\\d+)\", lambda x: num2words.num2words(int(x.group(0))), text)\n",

+ "                                texts.append(text)\n",

+ "\n",

+ "    print(\"Found \" + str(len(urls)) + \" urls\")\n",

+ "\n",

+ "    args = zip(urls, filenames)\n",

+ "\n",

+ "    prep(args)\n",

+ "\n",

+ "    total_audio_time = 0\n",

+ "    outFile=open(os.path.join(audio_dir, \"manifest.json\"), 'w')\n",

+ "    for i in range(len(urls)):\n",

+ "        item = {}\n",

+ "        text = texts[i]\n",

+ "        filename = filenames[i]\n",

+ "        item[\"audio_filepath\"] = os.path.join(audio_dir, filename)\n",

+ "        #item[\"text_normalized\"] = text\n",

+ "        #item[\"text_no_preprocessing\"] = text\n",

+ "        item[\"text\"] = text.lower()\n",

+ "        item[\"duration\"] = audio_duration(os.path.join(audio_dir, filename))\n",

+ "        total_audio_time = total_audio_time + item[\"duration\"]\n",

+ "        outFile.write(json.dumps(item, ensure_ascii=True, sort_keys=True) + \"\\n\")\n",

+ "\n",

+ "    outFile.close()\n",

+ "    print(str(total_audio_time/60.0) + \" min\")\n",

+ "\n",

+ "main()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "82a7d268",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!head -n 1 ./audio/manifest.json"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "832fcd67",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!cat ./audio/manifest.json | tail -n 2 > ./manifest_validation.json\n",

+ "!cat ./audio/manifest.json | head -n -2 > ./manifest_train.json"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "e56749bf",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "home_path = !(echo $HOME)\n",

+ "home_path = home_path[0]\n",

+ "print(home_path)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "9bf1fb18",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "import json\n",

+ "\n",

+ "import torch\n",

+ "import IPython.display as ipd\n",

+ "from matplotlib.pyplot import imshow\n",

+ "from matplotlib import pyplot as plt\n",

+ "\n",

+ "from nemo.collections.tts.models import FastPitchModel\n",

+ "FastPitchModel.from_pretrained(\"tts_en_fastpitch\")\n",

+ "\n",

+ "from pathlib import Path\n",

+ "nemo_files = [p for p in Path(f\"{home_path}/.cache/torch/NeMo/\").glob(\"**/tts_en_fastpitch_align.nemo\")]\n",

+ "print(f\"Copying {nemo_files[0]} to ./\")\n",

+ "Path(\"./tts_en_fastpitch_align.nemo\").write_bytes(nemo_files[0].read_bytes())"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "81741e30",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!wget https://raw.githubusercontent.com/NVIDIA/NeMo/v1.12.0/examples/tts/fastpitch_finetune.py\n",

+ "!wget https://raw.githubusercontent.com/NVIDIA/NeMo/v1.12.0/examples/tts/hifigan_finetune.py\n",

+ " \n",

+ "!mkdir -p conf\n",

+ "!cd conf \\\n",

+ "&& wget https://raw.githubusercontent.com/NVIDIA/NeMo/v1.12.0/examples/tts/conf/fastpitch_align_v1.05.yaml \\\n",

+ "&& wget https://raw.githubusercontent.com/NVIDIA/NeMo/v1.12.0/examples/tts/conf/hifigan/hifigan.yaml \\\n",

+ "&& cd .."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "64b51e94",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# additional files\n",

+ "!mkdir -p tts_dataset_files && cd tts_dataset_files \\\n",

+ "&& wget https://raw.githubusercontent.com/NVIDIA/NeMo/v1.12.0/scripts/tts_dataset_files/cmudict-0.7b_nv22.08 \\\n",

+ "&& wget https://raw.githubusercontent.com/NVIDIA/NeMo/v1.12.0/scripts/tts_dataset_files/heteronyms-052722 \\\n",

+ "&& wget https://raw.githubusercontent.com/NVIDIA/NeMo/v1.12.0/nemo_text_processing/text_normalization/en/data/whitelist/lj_speech.tsv \\\n",

+ "&& cd .."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "6b92a469",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!(python3 fastpitch_finetune.py --config-name=fastpitch_align_v1.05.yaml \\\n",

+ " train_dataset=./manifest_train.json \\\n",

+ " validation_datasets=./manifest_validation.json \\\n",

+ " sup_data_path=./fastpitch_sup_data \\\n",

+ " phoneme_dict_path=tts_dataset_files/cmudict-0.7b_nv22.08 \\\n",

+ " heteronyms_path=tts_dataset_files/heteronyms-052722 \\\n",

+ " whitelist_path=tts_dataset_files/lj_speech.tsv \\\n",

+ " exp_manager.exp_dir=./glados_out \\\n",

+ " +init_from_nemo_model=./tts_en_fastpitch_align.nemo \\\n",

+ " trainer.max_epochs=100 \\\n",

+ " trainer.check_val_every_n_epoch=25 \\\n",

+ " model.train_ds.dataloader_params.batch_size=12 model.validation_ds.dataloader_params.batch_size=12 \\\n",

+ " model.n_speakers=1 model.pitch_mean=121.9 model.pitch_std=23.1 \\\n",

+ " model.pitch_fmin=30 model.pitch_fmax=512 model.optim.lr=2e-4 \\\n",

+ " ~model.optim.sched model.optim.name=adam trainer.devices=1 trainer.strategy=null \\\n",

+ " +model.text_tokenizer.add_blank_at=true \\\n",

+ ")"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "780dba9a",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from nemo.collections.tts.models import HifiGanModel\n",

+ "from nemo.collections.tts.models import FastPitchModel\n",

+ "\n",

+ "vocoder = HifiGanModel.from_pretrained(\"tts_hifigan\")\n",

+ "vocoder = vocoder.eval().cuda()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "831239e2",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "def infer(spec_gen_model, vocoder_model, str_input, speaker=None):\n",

+ "    \"\"\"\n",

+ "    Synthesizes spectrogram and audio from a text string given a spectrogram synthesis and vocoder model.\n",

+ "\n",

+ "    Args:\n",

+ "        spec_gen_model: Spectrogram generator model (FastPitch in our case)\n",

+ "        vocoder_model: Vocoder model (HiFiGAN in our case)\n",

+ "        str_input: Text input for the synthesis\n",

+ "        speaker: Speaker ID\n",

+ "\n",

+ "    Returns:\n",

+ "        spectrogram and waveform of the synthesized audio.\n",

+ "    \"\"\"\n",

+ "    with torch.no_grad():\n",

+ "        parsed = spec_gen_model.parse(str_input)\n",

+ "        if speaker is not None:\n",

+ "            speaker = torch.tensor([speaker]).long().to(device=spec_gen_model.device)\n",

+ "        spectrogram = spec_gen_model.generate_spectrogram(tokens=parsed, speaker=speaker)\n",

+ "        audio = vocoder_model.convert_spectrogram_to_audio(spec=spectrogram)\n",

+ "\n",

+ "    if spectrogram is not None:\n",

+ "        if isinstance(spectrogram, torch.Tensor):\n",

+ "            spectrogram = spectrogram.to('cpu').numpy()\n",

+ "        if len(spectrogram.shape) == 3:\n",

+ "            spectrogram = spectrogram[0]\n",

+ "    if isinstance(audio, torch.Tensor):\n",

+ "        audio = audio.to('cpu').numpy()\n",

+ "    return spectrogram, audio\n",

+ "\n",

+ "def get_best_ckpt_from_last_run(\n",

+ "        base_dir,\n",

+ "        new_speaker_id,\n",

+ "        duration_mins,\n",

+ "        mixing_enabled,\n",

+ "        original_speaker_id,\n",

+ "        model_name=\"FastPitch\"\n",

+ "    ):\n",

+ "    mixing = \"no_mixing\" if not mixing_enabled else \"mixing\"\n",

+ "\n",

+ "    d = \"glados_out\"\n",

+ "\n",

+ "    exp_dirs = list([i for i in (Path(base_dir) / d / model_name).iterdir() if i.is_dir()])\n",

+ "    last_exp_dir = sorted(exp_dirs)[-1]\n",

+ "\n",

+ "    last_checkpoint_dir = last_exp_dir / \"checkpoints\"\n",

+ "\n",

+ "    last_ckpt = list(last_checkpoint_dir.glob('*-last.ckpt'))\n",

+ "\n",

+ "    if len(last_ckpt) == 0:\n",

+ "        raise ValueError(f\"There is no last checkpoint in {last_checkpoint_dir}.\")\n",

+ "\n",

+ "    return str(last_ckpt[0])"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "2fad0610",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "new_speaker_id = 6097\n",

+ "duration_mins = 5\n",

+ "mixing = False\n",

+ "original_speaker_id = \"ljspeech\"\n",

+ "\n",

+ "last_ckpt = get_best_ckpt_from_last_run(\"./\", new_speaker_id, duration_mins, mixing, original_speaker_id)\n",

+ "print(last_ckpt)\n",

+ "\n",

+ "spec_model = FastPitchModel.load_from_checkpoint(last_ckpt)\n",

+ "spec_model.eval().cuda()\n",

+ "\n",

+ "# Only need to set speaker_id if there is more than one speaker\n",

+ "speaker_id = None\n",

+ "if mixing:\n",

+ "    speaker_id = 1\n",

+ "\n",

+ "num_val = 2  # Number of validation samples\n",

+ "val_records = []\n",

+ "with open(\"manifest_validation.json\", \"r\") as f:\n",

+ "    for i, line in enumerate(f):\n",

+ "        val_records.append(json.loads(line))\n",

+ "        if len(val_records) >= num_val:\n",

+ "            break\n",

+ "\n",

+ "for val_record in val_records:\n",

+ "    print(\"Real validation audio\")\n",

+ "    ipd.display(ipd.Audio(val_record['audio_filepath'], rate=22050))\n",

+ "    print(f\"SYNTHESIZED FOR -- Speaker: {new_speaker_id} | Dataset size: {duration_mins} mins | Mixing:{mixing} | Text: {val_record['text']}\")\n",

+ "    spec, audio = infer(spec_model, vocoder, val_record['text'], speaker=speaker_id)\n",

+ "    ipd.display(ipd.Audio(audio, rate=22050))\n",

+ "    %matplotlib inline\n",

+ "    imshow(spec, origin=\"lower\", aspect=\"auto\")\n",

+ "    plt.show()"

+ ]

+ }

+ ],

+ "metadata": {

+ "kernelspec": {

+ "display_name": "Python 3 (ipykernel)",

+ "language": "python",

+ "name": "python3"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.8.10"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 5

+}

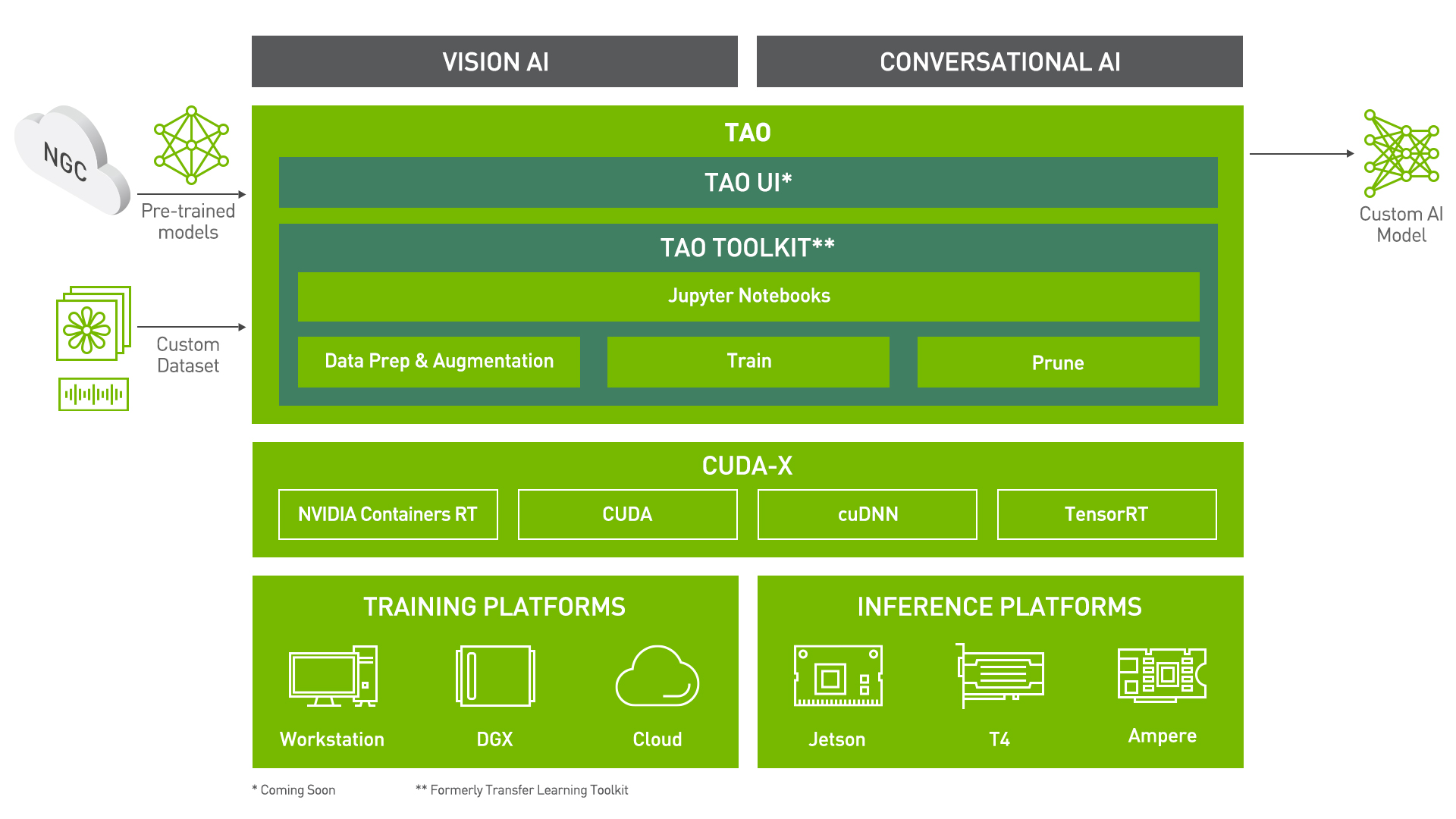
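
The NeMo notebook above splits `audio/manifest.json` with `tail -n 2` and `head -n -2`; the negative count passed to `head` is a GNU extension and may not work on other platforms. As a rough, portable illustration of the same hold-out split, here is a minimal Python sketch. The helper name `split_manifest` and the sample records are assumptions made for this example and are not part of the PR; only the manifest keys (`audio_filepath`, `text`, `duration`) come from the notebook.

```python
import json
import os
import tempfile


def split_manifest(manifest_path, train_path, val_path, num_val=2):
    """Hold out the last `num_val` JSON lines for validation (like tail/head)."""
    with open(manifest_path, "r") as f:
        # Skip blank lines so they are not counted as records.
        lines = [line for line in f if line.strip()]
    if num_val <= 0 or num_val >= len(lines):
        raise ValueError("num_val must be between 1 and len(lines) - 1")
    with open(train_path, "w") as f:
        f.writelines(lines[:-num_val])
    with open(val_path, "w") as f:
        f.writelines(lines[-num_val:])
    return len(lines) - num_val, num_val


# Build a tiny throwaway manifest (hypothetical records) and split it.
workdir = tempfile.mkdtemp()
manifest = os.path.join(workdir, "manifest.json")
with open(manifest, "w") as f:
    for i in range(5):
        record = {
            "audio_filepath": f"audio/clip_{i}.wav",
            "text": f"line {i}",
            "duration": 1.0 + i,
        }
        f.write(json.dumps(record, sort_keys=True) + "\n")

train_manifest = os.path.join(workdir, "manifest_train.json")
val_manifest = os.path.join(workdir, "manifest_validation.json")
n_train, n_val = split_manifest(manifest, train_manifest, val_manifest)
print(n_train, n_val)
```

Holding out the final lines mirrors what the notebook does; a shuffled split would be a different design choice and is not what the PR implements.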

diff --git a/TrainGLaDOS_TAO.ipynb b/TrainGLaDOS_TAO.ipynb

new file mode 100644

index 0000000..efd40d7

--- /dev/null

+++ b/TrainGLaDOS_TAO.ipynb

@@ -0,0 +1,1259 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "# Train Adapt Optimize (TAO) Toolkit"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Train Adapt Optimize (TAO) Toolkit is a Python-based AI toolkit for taking purpose-built pre-trained AI models and customizing them with your own data. \n",

+ "\n",

+ "Transfer learning extracts learned features from an existing neural network to a new one. Transfer learning is often used when creating a large training dataset is not feasible. \n",

+ "\n",

+ "Developers, researchers, and software partners building intelligent AI apps and services can bring their own data to fine-tune pre-trained models instead of going through the hassle of training from scratch."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ ""

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "The goal of this toolkit is to reduce that 80-hour workload to an 8-hour workload, which enables data scientists to run considerably more train-test iterations in the same time frame.\n",

+ "\n",

+ "Let's see this in action with a use case for Speech Synthesis!"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Text to Speech"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Text to Speech (TTS) is often the last step in building a Conversational AI model. A TTS model converts text into audible speech. The main objective is to synthesize reasonable and natural speech for a given text. Since there is no universal standard for measuring the quality of synthesized speech, you will need to listen to some inferred speech to tell whether a TTS model is well trained.\n",

+ "\n",

+ "In TAO Toolkit, TTS is made up of two models: [FastPitch](https://arxiv.org/pdf/2006.06873.pdf) for spectrogram generation and [HiFiGAN](https://arxiv.org/pdf/2010.05646.pdf) as the vocoder."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "---\n",

+ "## Let's Dig in: TTS using TAO\n",

+ "\n",

+ "This notebook assumes that you are already familiar with TTS Training using TAO, as described in the [text-to-speech-training](https://catalog.ngc.nvidia.com/orgs/nvidia/teams/tao/resources/texttospeech_notebook) notebook, and that you have a pretrained TTS model."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### Installing and setting up TAO\n",

+ "\n",

+ "For ease of use, please install TAO inside a python virtual environment. We recommend performing this step first and then launching the notebook from the virtual environment.\n",

+ "\n",

+ "In addition to installing the TAO Python package, please make sure the following software requirements are met:\n",

+ "\n",

+ "1. python 3.6.9\n",

+ "2. docker-ce > 19.03.5\n",

+ "3. docker-API 1.40\n",

+ "4. nvidia-container-toolkit > 1.3.0-1\n",

+ "5. nvidia-container-runtime > 3.4.0-1\n",

+ "6. nvidia-docker2 > 2.5.0-1\n",

+ "7. nvidia-driver >= 455.23"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Running the cell below installs TAO Toolkit."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "! pip3 install wheel\n",

+ "! pip3 install --force-reinstall nvidia-pyindex\n",

+ "! pip3 install --force-reinstall nvidia-tao\n",

+ "#! sudo apt install --reinstall nvidia-container-toolkit nvidia-container-runtime nvidia-docker2"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!pip3 install librosa\n",

+ "!pip3 install matplotlib\n",

+ "!pip3 install --upgrade hydra-core hydra\n",

+ "#! pip install numba==0.48\n",

+ "#! pip install librosa==0.7\n",

+ "#! pip install soundfile"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "After installing TAO, the next step is to set up the mounts for TAO. The TAO launcher uses docker containers under the hood, and **for our data and results directories to be visible to the docker container, they need to be mapped**. The launcher can be configured using the config file `~/.tao_mounts.json`. Apart from the mounts, you can also configure additional options like the Environment Variables and amount of Shared Memory available to the TAO launcher.\n",

+ "\n",

+ "Replace the variables FIXME with the required paths enclosed in `\"\"` as a string.\n",

+ "\n",

+ "`IMPORTANT NOTE:` The code below creates a sample `~/.tao_mounts.json` file. Here, we can map directories in which we save the data, specs, results and cache. You should configure it for your specific case so these directories are correctly visible to the docker container."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# please define these paths on your local host machine\n",

+ "import os\n",

+ "from pathlib import Path\n",

+ "\n",

+ "os.environ[\"HOST_DATA_DIR\"] = \"/home/davesarmoury/ws/glados_ws/TAO/tmp/data\"\n",

+ "os.environ[\"HOST_SPECS_DIR\"] = \"/home/davesarmoury/ws/glados_ws/TAO/tmp/specs\"\n",

+ "os.environ[\"HOST_RESULTS_DIR\"] = \"/home/davesarmoury/ws/glados_ws/TAO/tmp/results\""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "! mkdir -p $HOST_DATA_DIR\n",

+ "! mkdir -p $HOST_SPECS_DIR\n",

+ "! mkdir -p $HOST_RESULTS_DIR"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Mapping up the local directories to the TAO docker.\n",

+ "import json\n",

+ "import os\n",

+ "mounts_file = os.path.expanduser(\"~/.tao_mounts.json\")\n",

+ "tlt_configs = {\n",

+ " \"Mounts\":[\n",

+ " {\n",

+ " \"source\": os.environ[\"HOST_DATA_DIR\"],\n",

+ " \"destination\": \"/data\"\n",

+ " },\n",

+ " {\n",

+ " \"source\": os.environ[\"HOST_SPECS_DIR\"],\n",

+ " \"destination\": \"/specs\"\n",

+ " },\n",

+ " {\n",

+ " \"source\": os.environ[\"HOST_RESULTS_DIR\"],\n",

+ " \"destination\": \"/results\"\n",

+ " },\n",

+ " {\n",

+ " \"source\": os.path.expanduser(\"~/.cache\"),\n",

+ " \"destination\": \"/root/.cache\"\n",

+ " },\n",

+ " ],\n",

+ " \"DockerOptions\": {\n",

+ " \"shm_size\": \"16G\",\n",

+ " \"ulimits\": {\n",

+ " \"memlock\": -1,\n",

+ " \"stack\": 67108864\n",

+ " }\n",

+ " }\n",

+ "}\n",

+ "# Writing the mounts file.\n",

+ "with open(mounts_file, \"w\") as mfile:\n",

+ " json.dump(tlt_configs, mfile, indent=4)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "You can check the docker image versions and the tasks that they perform. You can also check this out with a `tao --help` or"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "! tao info --verbose"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### Set Relevant Paths"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# NOTE: The following paths are set from the perspective of the TAO Docker.\n",

+ "\n",

+ "import os\n",

+ "\n",

+ "# The data is saved here\n",

+ "DATA_DIR = \"/data\"\n",

+ "SPECS_DIR = \"/specs\"\n",

+ "RESULTS_DIR = \"/results\"\n",

+ "\n",

+ "# Set your encryption key, and use the same key for all commands\n",

+ "KEY = 'tlt_encode'\n",

+ "\n",

+ "os.environ[\"DATA_DIR\"] = DATA_DIR\n",

+ "os.environ[\"SPECS_DIR\"] = SPECS_DIR\n",

+ "os.environ[\"RESULTS_DIR\"] = RESULTS_DIR"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Now that everything is set up, we would like to take a bit of time to explain the tao interface for ease of use. The command structure can be broken down as follows: `tao <task> <subtask> <args>`\n",

+ "\n",

+ "Let's see this in further detail."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "\n",

+ "### Downloading Specs\n",

+ "TAO's Conversational AI Toolkit works off of spec files which make it easy to edit hyperparameters on the fly. We can proceed to downloading the spec files. The user may choose to modify/rewrite these specs, or even individually override them through the launcher. You can download the default spec files by using the `download_specs` command.\n",

+ "\n",

+ "The `-o` argument indicates the folder where the default specification files will be downloaded, and `-r` instructs the script where to save the logs. **Make sure `-o` points to an empty folder!**"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# download spec files for FastPitch\n",

+ "!tao spectro_gen download_specs \\\n",

+ " -r $RESULTS_DIR/spectro_gen \\\n",

+ " -o $SPECS_DIR/spectro_gen"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "scrolled": true

+ },

+ "outputs": [],

+ "source": [

+ "# download spec files for HiFiGAN\n",

+ "!tao vocoder download_specs \\\n",

+ " -r $RESULTS_DIR/vocoder \\\n",

+ " -o $SPECS_DIR/vocoder"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import requests\n",

+ "from multiprocessing import cpu_count\n",

+ "from multiprocessing.pool import ThreadPool\n",

+ "import shutil\n",

+ "import os\n",

+ "from bs4 import BeautifulSoup\n",

+ "import soundfile as sf\n",

+ "import string\n",

+ "import json\n",

+ "import re\n",

+ "import num2words\n",

+ "\n",

+ "class bcolors:\n",

+ " HEADER = '\\033[95m'\n",

+ " OKBLUE = '\\033[94m'\n",

+ " OKCYAN = '\\033[96m'\n",

+ " OKGREEN = '\\033[92m'\n",

+ " WARNING = '\\033[93m'\n",

+ " FAIL = '\\033[91m'\n",

+ " ENDC = '\\033[0m'\n",

+ " BOLD = '\\033[1m'\n",

+ " UNDERLINE = '\\033[4m'\n",

+ "\n",

+ "blocklist = [\"potato\", \"_ding_\", \"00_part1_entry-6\", \"_escape_\"]\n",

+ "audio_dir = 'tmp/data/GLaDOS'\n",

+ "download_threads = 64\n",

+ "\n",

+ "def prep(args, overwrite=False):\n",

+ "    already_exists = os.path.exists(audio_dir)\n",

+ "\n",

+ "    if already_exists and not overwrite:\n",

+ "        print(\"Data already downloaded\")\n",

+ "        return\n",

+ "\n",

+ "    if already_exists:\n",

+ "        print(\"Deleting previously downloaded audio\")\n",

+ "        shutil.rmtree(audio_dir)\n",

+ "\n",

+ "    os.makedirs(audio_dir)  # audio_dir is nested, so create parent directories too\n",

+ "    download_parallel(args)\n",

+ "\n",

+ "def remove_punctuation(text):\n",

+ "    return text.translate(str.maketrans('', '', string.punctuation))\n",

+ "\n",

+ "def audio_duration(fn):\n",

+ "    info = sf.info(fn)\n",

+ "    return info.frames / info.samplerate\n",

+ "\n",

+ "def download_file(args):\n",

+ "    url, filename = args[0], args[1]\n",

+ "    try:\n",

+ "        response = requests.get(url)\n",

+ "        response.raise_for_status()  # treat HTTP errors as failed downloads\n",

+ "        open(os.path.join(audio_dir, filename), \"wb\").write(response.content)\n",

+ "        return filename, True\n",

+ "    except Exception:\n",

+ "        return filename, False\n",

+ "\n",

+ "def download_parallel(args):\n",

+ "    results = ThreadPool(download_threads).imap_unordered(download_file, args)\n",

+ "    for result in results:\n",

+ "        if result[1]:\n",

+ "            print(bcolors.OKGREEN + \"[\" + u'\\u2713' + \"] \" + bcolors.ENDC + result[0])\n",

+ "        else:\n",

+ "            print(bcolors.FAIL + \"[\" + u'\\u2715' + \"] \" + bcolors.ENDC + result[0])\n",

+ "\n",

+ "def main():\n",

+ "    r = requests.get(\"https://theportalwiki.com/wiki/GLaDOS_voice_lines\")\n",

+ "\n",

+ "    urls = []\n",

+ "    filenames = []\n",

+ "    texts = []\n",

+ "\n",

+ "    soup = BeautifulSoup(r.text.encode('utf-8').decode('ascii', 'ignore'), 'html.parser')\n",

+ "    for link_item in soup.find_all('a'):\n",

+ "        url = link_item.get(\"href\", None)\n",

+ "        if url:\n",

+ "            if \"https:\" in url and \".wav\" in url:\n",

+ "                list_item = link_item.find_parent(\"li\")\n",

+ "                ital_item = list_item.find_all('i')\n",

+ "                if ital_item:\n",

+ "                    text = ital_item[0].text\n",

+ "                    text = text.replace('\"', '')\n",

+ "                    filename = url[url.rindex(\"/\")+1:]\n",

+ "\n",

+ "                    if \"[\" not in text and \"]\" not in text and \"$\" not in text:\n",

+ "                        if url not in urls:\n",

+ "                            for s in blocklist:\n",

+ "                                if s in url:\n",

+ "                                    break\n",

+ "                            else:\n",

+ "                                urls.append(url)\n",

+ "                                filenames.append(filename)\n",

+ "                                text = text.replace('*', '')\n",

+ "                                texts.append(text)\n",

+ "\n",

+ "    print(\"Found \" + str(len(urls)) + \" urls\")\n",

+ "\n",

+ "    args = zip(urls, filenames)\n",

+ "\n",

+ "    prep(args)\n",

+ "\n",

+ "    total_audio_time = 0\n",

+ "    outFile=open(os.path.join(audio_dir, \"manifest.json\"), 'w')\n",

+ "    for i in range(len(urls)):\n",

+ "        item = {}\n",

+ "        text = texts[i]\n",

+ "        filename = filenames[i]\n",

+ "        item[\"audio_filepath\"] = filename\n",

+ "        item[\"text_normalized\"] = re.sub(r\"(\\d+)\", lambda x: num2words.num2words(int(x.group(0))), text)\n",

+ "        item[\"text\"] = text.lower()\n",

+ "        item[\"duration\"] = audio_duration(os.path.join(audio_dir, filename))\n",

+ "        total_audio_time = total_audio_time + item[\"duration\"]\n",

+ "        outFile.write(json.dumps(item, ensure_ascii=True, sort_keys=True) + \"\\n\")\n",

+ "\n",

+ "    outFile.close()\n",

+ "    print(str(total_audio_time/60.0) + \" min\")\n",

+ "\n",

+ "main()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "! wget https://data.keithito.com/data/speech/LJSpeech-1.1.tar.bz2\n",

+ "! tar -xf LJSpeech-1.1.tar.bz2\n",

+ "! mv LJSpeech-1.1 tmp/data/LJSpeech\n",

+ "! rm LJSpeech-1.1.tar.bz2"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "! tao spectro_gen dataset_convert \\\n",

+ " -e $SPECS_DIR/spectro_gen/dataset_convert_ljs.yaml \\\n",

+ " -r $RESULTS_DIR/spectro_gen/dataset_convert \\\n",

+ " data_dir=$DATA_DIR/LJSpeech \\\n",

+ " dataset_name=ljspeech"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "original_data_csv = os.path.join(os.environ[\"HOST_DATA_DIR\"], \"LJSpeech/metadata.csv\")\n",

+ "original_data_json = os.path.join(os.environ[\"HOST_DATA_DIR\"], \"LJSpeech/ljspeech_train.json\")\n",

+ "\n",

+ "original_data_name = \"LJSpeech\"\n",

+ "os.environ[\"original_data_name\"] = original_data_name\n",

+ "\n",

+ "os.environ[\"original_data_json\"] = original_data_json\n",

+ "print(original_data_json)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Let's now download the data from the NVIDIA Custom Voice Recorder tool, and place the data in the `$HOST_DATA_DIR`"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "\n",

+ "# Name of the untarred dataset from the NVIDIA Custom Voice Recorder.\n",

+ "finetune_data_name = \"GLaDOS\"\n",

+ "os.environ[\"finetune_data_name\"] = finetune_data_name\n",

+ "finetune_data_path = os.path.join(os.getenv(\"HOST_DATA_DIR\"), finetune_data_name)\n",

+ "print(finetune_data_path)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Now that you have downloaded the data, let's make sure that the audio clips are sampled at the same sampling frequency as the clips used to train the pretrained model. For the course of this notebook, NVIDIA recommends using a model trained on the LJSpeech dataset. The sampling rate for this model is 22.05 kHz."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "scrolled": false

+ },

+ "outputs": [],

+ "source": [

+ "import soundfile\n",

+ "import librosa\n",

+ "import json\n",

+ "import os\n",

+ "\n",

+ "print(librosa.__version__)\n",

+ "def resample_audio(input_file_path, output_path, target_sampling_rate=22050):\n",

+ "    \"\"\"Resample a single audio file.\n",

+ "\n",

+ "    Args:\n",

+ "        input_file_path (str): Path to the input audio file.\n",

+ "        output_path (str): Path to the output directory.\n",

+ "        target_sampling_rate (int): Sampling rate for output audio file.\n",

+ "\n",

+ "    Returns:\n",

+ "        str: Base name of the resampled file written to output_path.\n",

+ "    \"\"\"\n",

+ "    if not input_file_path.endswith(\".wav\"):\n",

+ "        raise NotImplementedError(\"Loading only implemented for wav files.\")\n",

+ "    if not os.path.exists(input_file_path):\n",

+ "        raise FileNotFoundError(f\"Cannot find input file at {input_file_path}\")\n",

+ "    audio, sampling_rate = librosa.load(\n",

+ "        input_file_path,\n",

+ "        sr=target_sampling_rate\n",

+ "    )\n",

+ "    filename = os.path.basename(input_file_path)\n",

+ "    if not os.path.exists(output_path):\n",

+ "        os.makedirs(output_path)\n",

+ "    soundfile.write(\n",

+ "        os.path.join(output_path, filename),\n",

+ "        audio,\n",

+ "        samplerate=target_sampling_rate,\n",

+ "        format=\"wav\"\n",

+ "    )\n",

+ "    return filename"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from tqdm.notebook import tqdm\n",

+ "\n",

+ "relative_path = f\"{finetune_data_name}/clips_resampled\"\n",

+ "resampled_manifest_file = os.path.join(\n",

+ " os.environ[\"HOST_DATA_DIR\"],\n",

+ " f\"{finetune_data_name}/manifest_resampled.json\"\n",

+ ")\n",

+ "input_manifest_file = os.path.join(\n",

+ " os.environ[\"HOST_DATA_DIR\"],\n",

+ " f\"{finetune_data_name}/manifest.json\"\n",

+ ")\n",

+ "sampling_rate = 22050\n",

+ "output_path = os.path.join(os.environ[\"HOST_DATA_DIR\"], relative_path)\n",

+ "\n",

+ "print(\"########################################\")\n",

+ "print(\"resampled_manifest_file: \" + resampled_manifest_file)\n",

+ "print(\"input_manifest_file: \" + input_manifest_file)\n",

+ "print(\"output_path: \" + output_path)\n",

+ "print(\"########################################\")\n",

+ "\n",

+ "# Resampling the audio clip.\n",

+ "with open(input_manifest_file, \"r\") as finetune_file:\n",

+ "    with open(resampled_manifest_file, \"w\") as resampled_file:\n",

+ "        for line in tqdm(finetune_file.readlines()):\n",

+ "            data = json.loads(line)\n",

+ "            filename = resample_audio(\n",

+ "                os.path.join(\n",

+ "                    os.environ[\"HOST_DATA_DIR\"],\n",

+ "                    finetune_data_name,\n",

+ "                    data[\"audio_filepath\"]\n",

+ "                ),\n",

+ "                output_path,\n",

+ "                target_sampling_rate=sampling_rate\n",

+ "            )\n",

+ "            data[\"audio_filepath\"] = os.path.join(\n",

+ "                os.environ[\"DATA_DIR\"],\n",

+ "                relative_path, filename\n",

+ "            )\n",

+ "            resampled_file.write(f\"{json.dumps(data)}\\n\")\n",

+ "\n",

+ "assert resampled_file.closed, \"Output file wasn't closed properly\"\n",

+ "assert finetune_file.closed, \"Input file wasn't closed properly\""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Splitting the dataset to train and val set.\n",

+ "!cat $HOST_DATA_DIR/$finetune_data_name/manifest_resampled.json | tail -n 2 > $HOST_DATA_DIR/$finetune_data_name/manifest_val.json\n",

+ "!cat $HOST_DATA_DIR/$finetune_data_name/manifest_resampled.json | head -n -2 > $HOST_DATA_DIR/$finetune_data_name/manifest_train.json"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from pathlib import Path\n",

+ "\n",

+ "finetune_data_json = Path(DATA_DIR) / f'{finetune_data_name}/manifest_train.json'\n",

+ "original_data_json = Path(DATA_DIR) / f'{original_data_name}/ljspeech_train.json'"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "The first step is to create a json that contains data from both the original data and the finetuning data. We can do this using dataset_convert:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "! tao spectro_gen dataset_convert \\\n",

+ " dataset_name=merge \\\n",

+ " original_json=$original_data_json \\\n",

+ " finetune_json=$finetune_data_json \\\n",

+ " save_path=$DATA_DIR/$finetune_data_name/merged_train.json \\\n",

+ " -r $DATA_DIR/dataset_convert/merge \\\n",

+ " -e $SPECS_DIR/spectro_gen/dataset_convert_ljs.yaml"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "#! sed -i 's/\"speaker\":/\"speaker_id\":/g' $HOST_DATA_DIR/$finetune_data_name/merged_train.json"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### Getting Pitch Statistics\n",

+ "\n",

+ "Training Fastpitch requires you to set 4 values for pitch extraction:\n",

+ " - `fmin`: The minimum frequency value in Hz used to estimate the fundamental frequency (f0)\n",

+ " - `fmax`: The maximum frequency value in Hz used to estimate the fundamental frequency (f0)\n",

+ " - `avg`: The average used to normalize the pitch\n",

+ " - `std`: The standard deviation used to normalize the pitch\n",

+ "\n",

+ "In order to get these, we first find a good `fmin` and `fmax` which are hyperparameters to librosa's pyin function.\n",

+ "After we set those, we can iterate over the finetuning dataset to extract the pitch mean and standard deviation.\n",

+ "\n",

+ "#### Obtain fmin and fmax\n",

+ "\n",

+ "To get fmin and fmax, we start with some defaults, and iterate through random samples of the dataset to ensure that pyin is correctly extracting the pitch.\n",

+ "\n",

+ "We look at the plotted spectrogram as well as the predicted fundamental frequency, f0. We want the predicted f0 (the cyan line) to match the lowest energy band in the spectrogram. Here is an example of a good match between the predicted f0 and the spectrogram:\n",

+ "\n",

+ "\n",

+ "\n",

+ "Here is an example of a bad match between the f0 and the spectrogram. The fmin was likely set too high. The f0 algorithm is missing the first two vocalizations, and is correctly matching the last half of speech. To fix this, the fmin should be set lower.\n",

+ "\n",

+ "\n",

+ "\n",

+ "Here is an example of samples that have low frequency noise. In order to eliminate the effects of noise, you have to set fmin above the noise frequency. Unfortunately, this will result in degraded TTS quality. It would be best to re-record the data in an environment with less noise.\n",

+ "\n",

+ "\n",

+ "\n",

+ "\n",

+ "*Note: You will have to run the below cell multiple times with different hyperparameters before you are able to find a good value for fmin and fmax.*\n",

+ "\n",

+ "*We set the `num_files` parameter to 10, so as to visualize only 10 plots in the dataset. You may choose to increase or decrease this value to generate more or fewer plots*"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import matplotlib.pyplot as plt\n",

+ "%matplotlib inline\n",

+ "import os\n",

+ "from math import ceil\n",

+ "from IPython.display import Image\n",

+ "\n",

+ "valid_image_ext = ['.jpg', '.png', '.jpeg', '.ppm']\n",

+ "\n",

+ "def visualize_images(image_dir, num_cols=2, num_images=10):\n",

+ " \"\"\"Visualize images in the notebook.\n",

+ " \n",

+ " Args:\n",

+ " image_dir (str): Path to the directory containing images.\n",

+ " num_cols (int): Number of columns.\n",

+ " num_images (int): Number of images.\n",

+ "\n",

+ " \"\"\"\n",

+ " output_path = os.path.join(os.environ['HOST_RESULTS_DIR'], image_dir)\n",

+ " num_rows = int(ceil(float(num_images) / float(num_cols)))\n",

+ " f, axarr = plt.subplots(num_rows, num_cols, figsize=[240,90])\n",

+ " f.tight_layout()\n",

+ " a = [os.path.join(output_path, image) for image in os.listdir(output_path) \n",

+ " if os.path.splitext(image)[1].lower() in valid_image_ext]\n",

+ " for idx, img_path in enumerate(a[:num_images]):\n",

+ " col_id = idx % num_cols\n",

+ " row_id = idx // num_cols\n",

+ " img = plt.imread(img_path)\n",

+ " axarr[row_id, col_id].imshow(img)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "scrolled": true

+ },

+ "outputs": [],

+ "source": [

+ "# Holy wow this takes FOREVER\n",

+ "!tao spectro_gen pitch_stats num_files=10 \\\n",

+ " pitch_fmin=80 \\\n",

+ " pitch_fmax=2048 \\\n",

+ " output_path=/results/spectro_gen/pitch_stats \\\n",

+ " compute_stats=false \\\n",

+ " render_plots=true \\\n",

+ " manifest_filepath=$DATA_DIR/$finetune_data_name/manifest_train.json \\\n",

+ " --results_dir $RESULTS_DIR/spectro_gen/pitch_stats"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "scrolled": true

+ },

+ "outputs": [],

+ "source": [

+ "visualize_images(\"spectro_gen/pitch_stats\", num_cols=5, num_images=10)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### Finetuning\n",

+ "\n",

+ "For finetuning TTS models in TAO, we use the `tao spectro_gen finetune` and `tao vocoder finetune` commands with the following args:\n",

+ "\n",

+ " - -m : Path to the model weights we want to finetune from\n",

+ " - -e : Path to the spec file\n",

+ " - -g : Number of GPUs to use\n",

+ " - -r : Path to the results folder\n",

+ " - -k : User specified encryption key to use while saving/loading the model\n",

+ " - Any overrides to the spec file\n",

+ "\n",

+ "\n",

+ "In order to get a finetuned TTS pipeline, you need to finetune FastPitch. For best results, you need to finetune HiFiGAN as well."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Finetuning FastPitch"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "scrolled": true

+ },

+ "outputs": [],

+ "source": [

+ "# Prior is needed for FastPitch training. If empty folder is provided, prior will generate on-the-fly\n",

+ "! mkdir -p $HOST_RESULTS_DIR/spectro_gen/finetune/prior_folder"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Please set the fmin, fmax, pitch_mean and pitch_std values based on\n",

+ "# the output from the tao spectro_gen pitch_stats task.\n",

+ "pitch_fmin = 80.0\n",

+ "pitch_fmax = 2048.0\n",

+ "pitch_mean = 165.458\n",

+ "pitch_std = 40.1891\n",

+ "\n",

+ "print(f\"pitch fmin:{pitch_fmin}\")\n",

+ "print(f\"pitch fmax:{pitch_fmax}\")\n",

+ "print(f\"pitch mean:{pitch_mean}\")\n",

+ "print(f\"pitch std:{pitch_std}\")\n",

+ "\n",

+ "os.environ[\"pitch_fmin\"] = str(pitch_fmin)\n",

+ "os.environ[\"pitch_fmax\"] = str(pitch_fmax)\n",

+ "os.environ[\"pitch_mean\"] = str(pitch_mean)\n",

+ "os.environ[\"pitch_std\"] = str(pitch_std)\n",

+ "\n",

+ "assert pitch_fmin < pitch_fmax , f\"pitch_fmin [{pitch_fmin}] must be less than pitch_fmax [{pitch_fmax}]\""

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Please be patient especially if you provided an empty prior folder.\n",

+ "\n",

+ "Please update the `-m` parameter to the path of your pre-trained checkpoint. This can be a previously trained `.tlt` or `.nemo` file.\n",

+ "\n",

+ "NVIDIA recommends using these [FastPitch](https://catalog.ngc.nvidia.com/orgs/nvidia/teams/nemo/models/tts_en_fastpitch) and [HiFiGAN](https://catalog.ngc.nvidia.com/orgs/nvidia/teams/nemo/models/tts_hifigan) checkpoints on [NGC](https://ngc.nvidia.com)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Installing NGC CLI to pull the models.\n",

+ "## Download and install\n",

+ "import os\n",

+ "\n",

+ "%env CLI=ngccli_cat_linux.zip\n",

+ "!mkdir -p $HOST_RESULTS_DIR/ngccli\n",

+ "\n",

+ "# Remove any previously existing CLI installations\n",

+ "!rm -rf $HOST_RESULTS_DIR/ngccli/*\n",

+ "!wget \"https://ngc.nvidia.com/downloads/$CLI\" -P $HOST_RESULTS_DIR/ngccli\n",

+ "!unzip -u \"$HOST_RESULTS_DIR/ngccli/$CLI\" -d $HOST_RESULTS_DIR/ngccli/\n",

+ "!rm $HOST_RESULTS_DIR/ngccli/*.zip \n",

+ "os.environ[\"PATH\"]=\"{}/ngccli/ngc-cli:{}\".format(os.getenv(\"HOST_RESULTS_DIR\", \"\"), os.getenv(\"PATH\", \"\"))\n",

+ "\n",

+ "#!ngc registry model download-version \"nvidia/nemo/tts_en_fastpitch:1.0.0\" --dest $HOST_DATA_DIR/\n",

+ "!ngc registry model download-version \"nvidia/nemo/tts_en_fastpitch:1.4.0\" --dest $HOST_DATA_DIR/\n",

+ "#!ngc registry model download-version \"nvidia/nemo/tts_en_fastpitch:1.8.1\" --dest $HOST_DATA_DIR/\n",

+ "!ngc registry model download-version \"nvidia/nemo/tts_hifigan:1.0.0rc1\" --dest $HOST_DATA_DIR/"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "pretrained_fastpitch_model = os.path.join(os.environ[\"DATA_DIR\"], \"tts_en_fastpitch_v1.4.0/tts_en_fastpitch_align.nemo\")\n",

+ "os.environ[\"pretrained_fastpitch_model\"] = pretrained_fastpitch_model\n",

+ "pretrained_hifigan_model = os.path.join(os.environ[\"DATA_DIR\"], \"tts_hifigan_v1.0.0rc1/tts_hifigan.nemo\")\n",

+ "os.environ[\"pretrained_hifigan_model\"] = pretrained_hifigan_model\n",

+ "os.environ[\"HYDRA_FULL_ERROR\"]=\"1\""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!tao spectro_gen finetune \\\n",

+ " -e $SPECS_DIR/spectro_gen/finetune.yaml \\\n",

+ " -g 1 \\\n",

+ " -k tlt_encode \\\n",

+ " -r $RESULTS_DIR/spectro_gen/finetune \\\n",

+ " -m $pretrained_fastpitch_model \\\n",

+ " train_dataset=$DATA_DIR/$finetune_data_name/merged_train.json \\\n",

+ " validation_dataset=$DATA_DIR/$finetune_data_name/manifest_val.json \\\n",

+ " prior_folder=$RESULTS_DIR/spectro_gen/finetune/prior_folder \\\n",

+ " trainer.max_epochs=200 \\\n",

+ " n_speakers=2 \\\n",

+ " pitch_fmin=$pitch_fmin \\\n",

+ " pitch_fmax=$pitch_fmax \\\n",

+ " pitch_avg=$pitch_mean \\\n",

+ " pitch_std=$pitch_std \\\n",

+ " trainer.precision=16"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Finetuning HiFiGAN\n",

+ "\n",

+ "In order to get the best audio from HiFiGAN, we need to finetune it:\n",

+ " - on the new speaker\n",

+ " - using mel spectrograms from our finetuned FastPitch Model\n",

+ "\n",

+ "Let's first generate mels from our FastPitch model, and save them to a new .json manifest for use with HiFiGAN."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import json\n",

+ "import os\n",

+ "\n",

+ "def infer_and_save_json(infer_json, save_json, subdir=\"train\"):\n",

+ " # Get records from the training manifest\n",

+ " host_manifest_path = os.path.join(os.environ[\"HOST_DATA_DIR\"], infer_json)\n",

+ " tao_manifest_path = os.path.join(DATA_DIR, infer_json)\n",

+ " host_save_json = os.path.join(os.environ[\"HOST_DATA_DIR\"], save_json)\n",

+ " records = []\n",

+ " text = {\"input_batch\": []}\n",

+ " print(\"Appending mel spectrogram paths to the dataset.\")\n",

+ " with open(host_manifest_path, \"r\") as f:\n",

+ " for i, line in enumerate(f):\n",

+ " manifest_info = json.loads(line)\n",

+ " manifest_info[\"mel_filepath\"] = f\"{RESULTS_DIR}/spectro_gen/infer/spectro/{subdir}/{i}.npy\"\n",

+ " records.append(manifest_info)\n",

+ " text[\"input_batch\"].append(manifest_info[\"text\"])\n",

+ "\n",

+ " !tao spectro_gen infer \\\n",

+ " -e $SPECS_DIR/spectro_gen/infer.yaml \\\n",

+ " -g 1 \\\n",

+ " -k $KEY \\\n",

+ " -m $RESULTS_DIR/spectro_gen/finetune/checkpoints/finetuned-model.tlt \\\n",

+ " -r $RESULTS_DIR/spectro_gen/infer \\\n",

+ " output_path=$RESULTS_DIR/spectro_gen/infer/spectro/$subdir \\\n",

+ " speaker=1 \\\n",

+ " mode=\"infer_hifigan_ft\" \\\n",

+ " input_json=$tao_manifest_path\n",

+ "\n",

+ " # Save to a new json\n",

+ " with open(host_save_json, \"w\") as f:\n",

+ " for r in records:\n",

+ " f.write(json.dumps(r) + '\\n')\n",

+ "\n",

+ "# Infer for train\n",

+ "infer_and_save_json(f\"{finetune_data_name}/manifest_train.json\", f\"{finetune_data_name}/hifigan_train_ft.json\")\n",

+ "# Infer for dev\n",

+ "infer_and_save_json(f\"{finetune_data_name}/manifest_val.json\", f\"{finetune_data_name}/hifigan_dev_ft.json\", \"dev\")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "Now let's finetune HiFiGAN.\n",

+ "\n",

+ "Please update the `-m` parameter to the path of your pre-trained checkpoint."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "scrolled": true

+ },

+ "outputs": [],

+ "source": [

+ "!tao vocoder finetune \\\n",

+ " -e $SPECS_DIR/vocoder/finetune.yaml \\\n",

+ " -g 1 \\\n",

+ " -k $KEY \\\n",

+ " -r $RESULTS_DIR/vocoder/finetune \\\n",

+ " -m $pretrained_hifigan_model \\\n",

+ " train_dataset=$DATA_DIR/$finetune_data_name/hifigan_train_ft.json \\\n",

+ " validation_dataset=$DATA_DIR/$finetune_data_name/hifigan_dev_ft.json \\\n",

+ " trainer.max_epochs=200"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### TTS Inference"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "As mentioned earlier, there is no universal standard for measuring the quality of synthesized speech, so you will need to listen to some inferred speech to tell whether a TTS model is well trained. Therefore, TAO Toolkit provides only `infer` functionality for TTS, not `evaluate`."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Generate spectrogram\n",

+ "\n",

+ "The first step for inference is generating a spectrogram: a numpy array (saved as a `.npy` file) for a sentence, which a vocoder can convert to voice. We use the FastPitch model we just trained to generate the spectrogram.\n",

+ "\n",

+ "You might have to work with the infer.yaml file to set the texts you want for inference"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!tao spectro_gen infer \\\n",

+ " -e $SPECS_DIR/spectro_gen/infer.yaml \\\n",

+ " -g 1 \\\n",

+ " -k $KEY \\\n",

+ " -m $RESULTS_DIR/spectro_gen/finetune/checkpoints/finetuned-model.tlt \\\n",

+ " -r $RESULTS_DIR/spectro_gen/infer_output \\\n",

+ " output_path=$RESULTS_DIR/spectro_gen/infer_output/spectro \\\n",

+ " speaker=1"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Generate sound file\n",

+ "\n",

+ "The second step for inference is generating a wav sound file from the spectrogram you generated in the last step."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "scrolled": false

+ },

+ "outputs": [],

+ "source": [

+ "!tao vocoder infer \\\n",

+ " -e $SPECS_DIR/vocoder/infer.yaml \\\n",

+ " -g 1 \\\n",

+ " -k $KEY \\\n",

+ " -m $RESULTS_DIR/vocoder/finetune/checkpoints/finetuned-model.tlt \\\n",

+ " -r $RESULTS_DIR/vocoder/infer_output \\\n",

+ " input_path=$RESULTS_DIR/spectro_gen/infer_output/spectro \\\n",

+ " output_path=$RESULTS_DIR/vocoder/infer_output/wav"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "import IPython.display as ipd\n",

+ "# change path of the file here\n",

+ "ipd.Audio(os.environ[\"HOST_RESULTS_DIR\"] + '/vocoder/infer_output/wav/0.wav')\n",

+ "# ipd.Audio(os.environ[\"HOST_RESULTS_DIR\"] + '/vocoder/infer_output/wav/1.wav')\n",

+ "# ipd.Audio(os.environ[\"HOST_RESULTS_DIR\"] + '/vocoder/infer_output/wav/2.wav')"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Debug\n",

+ "\n",

+ "The data provided is only meant to be a sample to understand how finetuning works in TAO. In order to generate better speech quality, we recommend recording at least 30 mins of audio, and increasing the number of finetuning steps from the current `trainer.max_steps=1000` to `trainer.max_steps=5000` for both models."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### TTS model export\n",

+ "\n",

+ "With TAO, you can also export your model in a format that can be deployed using Nvidia Riva, a highly performant application framework for multi-modal conversational AI services using GPUs! The same command for exporting to ONNX can be used here. The only small variation is the configuration for `export_format` in the spec file!"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Export to ONNX\n",

+ "\n",

+ "Executing the snippets in the cells below allows you to generate a `.eonnx` model file for the spectrogram generator and vocoder models that were trained in the preceding cells. These models are required to generate a complete Text-To-Speech pipeline."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!tao spectro_gen export \\\n",

+ " -e $SPECS_DIR/spectro_gen/export.yaml \\\n",

+ " -g 1 \\\n",

+ " -k $KEY \\\n",

+ " -m $RESULTS_DIR/spectro_gen/finetune/checkpoints/finetuned-model.tlt \\\n",

+ " -r $RESULTS_DIR/spectro_gen/export \\\n",

+ " export_format=ONNX \\\n",

+ " export_to=spectro_gen.eonnx"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!tao vocoder export \\\n",

+ " -e $SPECS_DIR/vocoder/export.yaml \\\n",

+ " -g 1 \\\n",

+ " -k $KEY \\\n",

+ " -m $RESULTS_DIR/vocoder/finetune/checkpoints/finetuned-model.tlt \\\n",

+ " -r $RESULTS_DIR/vocoder/export \\\n",

+ " export_format=ONNX \\\n",

+ " export_to=vocoder.eonnx"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Export to RIVA\n",

+ "\n",

+ "Executing the snippets in the cells below allows you to generate a `.riva` model file for the spectrogram generator and vocoder models that were trained in the preceding cells. These models are required to generate a complete Text-To-Speech pipeline.\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!tao spectro_gen export \\\n",

+ " -e $SPECS_DIR/spectro_gen/export.yaml \\\n",

+ " -g 1 \\\n",

+ " -k $KEY \\\n",

+ " -m $RESULTS_DIR/spectro_gen/finetune/checkpoints/finetuned-model.tlt \\\n",

+ " -r $RESULTS_DIR/spectro_gen/export \\\n",

+ " export_format=RIVA \\\n",

+ " export_to=spectro_gen.riva"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!tao vocoder export \\\n",

+ " -e $SPECS_DIR/vocoder/export.yaml \\\n",

+ " -g 1 \\\n",

+ " -k $KEY \\\n",

+ " -m $RESULTS_DIR/vocoder/finetune/checkpoints/finetuned-model.tlt \\\n",

+ " -r $RESULTS_DIR/vocoder/export \\\n",

+ " export_format=RIVA \\\n",

+ " export_to=vocoder.riva"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### TTS Inference using ONNX\n",

+ "\n",

+ "TAO provides the capability to use the exported .eonnx model for inference. The commands are very similar to the inference command for .tlt models. Again, the inputs in the spec file are just for demo purposes; you may choose to try out your own custom input!"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Generate spectrogram\n",

+ "\n",

+ "The first step for inference is generating a spectrogram: a numpy array (saved as a `.npy` file) for a sentence, which a vocoder can convert to voice. We use the FastPitch model we just trained to generate the spectrogram.\n",

+ "\n",

+ "You might have to work with the infer.yaml file to set the texts you want for inference"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!tao spectro_gen infer_onnx \\\n",

+ " -e $SPECS_DIR/spectro_gen/infer.yaml \\\n",

+ " -g 1 \\\n",

+ " -k $KEY \\\n",

+ " -m $RESULTS_DIR/spectro_gen/export/spectro_gen.eonnx \\\n",

+ " -r $RESULTS_DIR/spectro_gen/infer_onnx \\\n",

+ " output_path=$RESULTS_DIR/spectro_gen/infer_onnx/spectro \\\n",

+ " speaker=1"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Generate sound file\n",

+ "\n",

+ "The second step for inference is generating a wav sound file from the spectrogram you generated in the last step."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!tao vocoder infer_onnx \\\n",

+ " -e $SPECS_DIR/vocoder/infer.yaml \\\n",

+ " -g 1 \\\n",

+ " -k $KEY \\\n",

+ " -m $RESULTS_DIR/vocoder/export/vocoder.eonnx \\\n",

+ " -r $RESULTS_DIR/vocoder/infer_onnx \\\n",

+ " input_path=$RESULTS_DIR/spectro_gen/infer_onnx/spectro \\\n",

+ " output_path=$RESULTS_DIR/vocoder/infer_onnx/wav"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "If everything works properly, the wav file below should sound exactly the same as the wav file in the previous section."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "import IPython.display as ipd\n",

+ "\n",

+ "# change path of the file here\n",

+ "ipd.Audio(os.environ[\"HOST_RESULTS_DIR\"] + '/vocoder/infer_onnx/wav/0.wav')\n",

+ "# ipd.Audio(os.environ[\"HOST_RESULTS_DIR\"] + '/vocoder/infer_onnx/wav/1.wav')\n",

+ "# ipd.Audio(os.environ[\"HOST_RESULTS_DIR\"] + '/vocoder/infer_onnx/wav/2.wav')"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### What's Next?\n",

+ "\n",

+ " You could use TAO to build custom models for your own applications, and deploy them to Nvidia Riva! To try deploying these models to RIVA, use the [text-to-speech-deployment.ipynb](https://catalog.ngc.nvidia.com/orgs/nvidia/teams/tao/resources/texttospeech_notebook) as a quick sample."

+ ]

+ }

+ ],

+ "metadata": {

+ "interpreter": {

+ "hash": "741d73fab70d7eb29e7b56260ebaa567f0620f4d2780830ca385f600e5120e14"

+ },

+ "kernelspec": {

+ "display_name": "Python 3 (ipykernel)",

+ "language": "python",

+ "name": "python3"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.8.10"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 4

+}

diff --git a/download_audio.py b/download_audio.py

index 0609142..7d6975a 100755

--- a/download_audio.py

+++ b/download_audio.py

@@ -1,5 +1,3 @@

-#!/usr/bin/env python3

-

import requests

from multiprocessing import cpu_count

from multiprocessing.pool import ThreadPool

@@ -9,6 +7,8 @@ from bs4 import BeautifulSoup

import soundfile as sf

import string

import json

+import re

+import num2words

class bcolors:

HEADER = '\033[95m'

@@ -25,12 +25,19 @@ blocklist = ["potato", "_ding_", "00_part1_entry-6"]

audio_dir = 'audio'

download_threads = 64

-def prep():

- if os.path.exists(audio_dir):

+def prep(args, overwrite=False):

+ already_exists = os.path.exists(audio_dir)

+

+ if already_exists and not overwrite:

+ print("Data already downloaded")

+ return

+

+ if already_exists:

print("Deleting previously downloaded audio")

shutil.rmtree(audio_dir)

os.mkdir(audio_dir)

+ download_parallel(args)

def remove_punctuation(str):

return str.translate(str.maketrans('', '', string.punctuation))

@@ -64,7 +71,7 @@ def main():

filenames = []

texts = []

- soup = BeautifulSoup(r.text, 'html.parser')

+ soup = BeautifulSoup(r.text.encode('utf-8').decode('ascii', 'ignore'), 'html.parser')

for link_item in soup.find_all('a'):

url = link_item.get("href", None)

if url:

@@ -84,32 +91,37 @@ def main():

else:

urls.append(url)

filenames.append(filename)

+ text = text.replace('*', '')

+ text = re.sub(r"(\d+)", lambda x: num2words.num2words(int(x.group(0))), text)

texts.append(text)

print("Found " + str(len(urls)) + " urls")

args = zip(urls, filenames)

- prep()

- download_parallel(args)

+ prep(args)

#{"audio_filepath": "audio/nada_lily_21_haggard_0316.wav",

#"text": "awake ye kings",

#"duration": 1.3,

#"text_no_preprocessing": "\u201cAwake, ye kings,\u201d",

#"text_normalized": "\"Awake, ye kings,\""}

+

+ total_audio_time = 0

outFile=open(os.path.join(audio_dir, "manifest.json"), 'w')

for i in range(len(urls)):

item = {}

text = texts[i]

filename = filenames[i]

- item["audio_filepath"] = filename

+ item["audio_filepath"] = os.path.join(audio_dir, filename)

item["text_normalized"] = text

item["text_no_preprocessing"] = text

item["text"] = text.lower()

item["duration"] = audio_duration(os.path.join(audio_dir, filename))

+ total_audio_time = total_audio_time + item["duration"]

outFile.write(json.dumps(item, ensure_ascii=True, sort_keys=True) + "\n")

outFile.close()

+ print(str(total_audio_time/60.0) + " min")

-main()

+main()

\ No newline at end of file
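
Review note: the manifest-writing loop changed in `download_audio.py` above could be sketched as a standalone function, which may make the path and duration handling easier to test. This is only a sketch under stated assumptions, not the author's implementation: `normalize_text` and `write_manifest` are hypothetical names, durations are passed in rather than read from the wav files with `soundfile`, and the `num2words` digit expansion is left out to keep the example standard-library only.

```python
import json
import os


def normalize_text(text):
    """Strip asterisks, as the diff does (digit expansion is omitted here)."""
    return text.replace("*", "")


def write_manifest(entries, audio_dir, manifest_path):
    """Write one JSON object per line; return the total duration in seconds.

    `entries` is a list of (filename, text, duration_seconds) tuples.
    Passing durations in is an assumption made for this sketch; the script
    itself measures them from the downloaded audio.
    """
    total_audio_time = 0.0
    # The context manager closes the file even if a write fails.
    with open(manifest_path, "w") as out_file:
        for filename, text, duration in entries:
            text = normalize_text(text)
            item = {
                "audio_filepath": os.path.join(audio_dir, filename),
                "text_normalized": text,
                "text_no_preprocessing": text,
                "text": text.lower(),
                "duration": duration,
            }
            total_audio_time += duration
            out_file.write(
                json.dumps(item, ensure_ascii=True, sort_keys=True) + "\n"
            )
    return total_audio_time
```

Dividing the returned total by 60.0 gives the minutes figure that the script prints after closing the manifest.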